Abstract

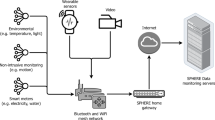

Advances in machine learning and contactless sensors have given rise to ambient intelligence: physical spaces that are sensitive and responsive to the presence of humans. Here we review how this technology could improve our understanding of the metaphorically dark, unobserved spaces of healthcare. In hospital spaces, early applications could soon enable more efficient clinical workflows and improved patient safety in intensive care units and operating rooms. In daily living spaces, ambient intelligence could prolong the independence of older individuals and improve the management of individuals with a chronic disease by understanding everyday behaviour. As with other technologies, translating ambient intelligence into clinical applications at scale must overcome challenges such as rigorous clinical validation, appropriate data privacy and model transparency. Thoughtful use of this technology would enable us to understand the complex interplay between the physical environment and health-critical human behaviours.

References

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015). This paper reviews developments in deep learning and explains common neural network architectures such as convolutional and recurrent neural networks when applied to visual and natural language-processing tasks.

Jordan, M. I. & Mitchell, T. M. Machine learning: trends, perspectives, and prospects. Science 349, 255–260 (2015).

Esteva, A. et al. A guide to deep learning in healthcare. Nat. Med. 25, 24–29 (2019). This perspective describes the use of computer vision, natural language processing, speech recognition and reinforcement learning for medical imaging tasks, electronic health record analysis, robotic-assisted surgery and genomic research.

Topol, E. J. High-performance medicine: the convergence of human and artificial intelligence. Nat. Med. 25, 44–56 (2019). This review outlines how artificial intelligence is used by clinicians, patients and health systems to interpret medical images, find workflow efficiencies and promote patient self-care.

Sutton, R. T. et al. An overview of clinical decision support systems: benefits, risks, and strategies for success. NPJ Digit. Med. 3, 17 (2020).

Yeung, S., Downing, N. L., Fei-Fei, L. & Milstein, A. Bedside computer vision — moving artificial intelligence from driver assistance to patient safety. N. Engl. J. Med. 378, 1271–1273 (2018).

Haynes, A. B. et al. A surgical safety checklist to reduce morbidity and mortality in a global population. N. Engl. J. Med. 360, 491–499 (2009).

Makary, M. A. & Daniel, M. Medical error—the third leading cause of death in the US. Br. Med. J. 353, i2139 (2016).

Tallentire, V. R., Smith, S. E., Skinner, J. & Cameron, H. S. Exploring error in team-based acute care scenarios: an observational study from the United Kingdom. Acad. Med. 87, 792–798 (2012).

Yang, T. et al. Evaluation of medical malpractice litigations in China, 2002–2011. J. Forensic Sci. Med. 2, 185–189 (2016).

Pol, M. C., ter Riet, G., van Hartingsveldt, M., Kröse, B. & Buurman, B. M. Effectiveness of sensor monitoring in a rehabilitation programme for older patients after hip fracture: a three-arm stepped wedge randomised trial. Age Ageing 48, 650–657 (2019).

Fritz, R. L. & Dermody, G. A nurse-driven method for developing artificial intelligence in “smart” homes for aging-in-place. Nurs. Outlook 67, 140–153 (2019).

Kaye, J. A. et al. F5-05-04: ecologically valid assessment of life activities: unobtrusive continuous monitoring with sensors. Alzheimers Dement. 12, P374 (2016).

Acampora, G., Cook, D. J., Rashidi, P. & Vasilakos, A. V. A survey on ambient intelligence in health care. Proc. IEEE 101, 2470–2494 (2013).

Cook, D. J., Duncan, G., Sprint, G. & Fritz, R. Using smart city technology to make healthcare smarter. Proc. IEEE 106, 708–722 (2018).

Centers for Disease Control and Prevention. National Health Interview Survey: Summary Health Statistics https://www.cdc.gov/nchs/nhis/shs.htm (2018).

NHS Digital. Hospital Admitted Patient Care and Adult Critical Care Activity 2018–19 https://digital.nhs.uk/data-and-information/publications/statistical/hospital-admitted-patient-care-activity/2018-19 (NHS, 2019).

Patel, R. S., Bachu, R., Adikey, A., Malik, M. & Shah, M. Factors related to physician burnout and its consequences: a review. Behav. Sci. (Basel) 8, 98 (2018).

Lyon, M. et al. Rural ED transfers due to lack of radiology services. Am. J. Emerg. Med. 33, 1630–1634 (2015).

Adams, J. G. & Walls, R. M. Supporting the health care workforce during the COVID-19 global epidemic. J. Am. Med. Assoc. 323, 1439–1440 (2020).

Halpern, N. A., Goldman, D. A., Tan, K. S. & Pastores, S. M. Trends in critical care beds and use among population groups and Medicare and Medicaid beneficiaries in the United States: 2000–2010. Crit. Care Med. 44, 1490–1499 (2016).

Halpern, N. A. & Pastores, S. M. Critical care medicine in the United States 2000–2005: an analysis of bed numbers, occupancy rates, payer mix, and costs. Crit. Care Med. 38, 65–71 (2010).

Hermans, G. et al. Acute outcomes and 1-year mortality of intensive care unit-acquired weakness. A cohort study and propensity-matched analysis. Am. J. Respir. Crit. Care Med. 190, 410–420 (2014).

Zhang, L. et al. Early mobilization of critically ill patients in the intensive care unit: a systematic review and meta-analysis. PLoS ONE 14, e0223185 (2019).

Donchin, Y. et al. A look into the nature and causes of human errors in the intensive care unit. Crit. Care Med. 23, 294–300 (1995).

Hodgson, C. L., Berney, S., Harrold, M., Saxena, M. & Bellomo, R. Clinical review: early patient mobilization in the ICU. Crit. Care 17, 207 (2013).

Verceles, A. C. & Hager, E. R. Use of accelerometry to monitor physical activity in critically ill subjects: a systematic review. Respir. Care 60, 1330–1336 (2015).

Ma, A. J. et al. Measuring patient mobility in the ICU using a novel noninvasive sensor. Crit. Care Med. 45, 630–636 (2017).

Yeung, S. et al. A computer vision system for deep learning-based detection of patient mobilization activities in the ICU. NPJ Digit. Med. 2, 11 (2019). This study used computer vision to simultaneously categorize patient mobilization activities in intensive care units and count the number of healthcare personnel involved in each activity.

Davoudi, A. et al. Intelligent ICU for autonomous patient monitoring using pervasive sensing and deep learning. Sci. Rep. 9, 8020 (2019). This study used cameras and wearable sensors to track the physical movement of delirious and non-delirious patients in an intensive care unit.

WHO. Report on the Burden of Endemic Health Care-associated Infection Worldwide https://apps.who.int/iris/handle/10665/80135 (2011).

Vincent, J.-L. Nosocomial infections in adult intensive-care units. Lancet 361, 2068–2077 (2003).

Gould, D. J., Moralejo, D., Drey, N., Chudleigh, J. H. & Taljaard, M. Interventions to improve hand hygiene compliance in patient care. Cochrane Database Syst. Rev. 9, CD005186 (2017).

Srigley, J. A., Furness, C. D., Baker, G. R. & Gardam, M. Quantification of the Hawthorne effect in hand hygiene compliance monitoring using an electronic monitoring system: a retrospective cohort study. BMJ Qual. Saf. 23, 974–980 (2014).

Shirehjini, A. A. N., Yassine, A. & Shirmohammadi, S. Equipment location in hospitals using RFID-based positioning system. IEEE Trans. Inf. Technol. Biomed. 16, 1058–1069 (2012).

Sax, H. et al. ‘My five moments for hand hygiene’: a user-centred design approach to understand, train, monitor and report hand hygiene. J. Hosp. Infect. 67, 9–21 (2007).

Haque, A. et al. Towards vision-based smart hospitals: a system for tracking and monitoring hand hygiene compliance. In Proc. 2nd Machine Learning for Healthcare Conference 75–87 (PMLR, 2017). This study evaluated the performance of depth sensors and covert auditors at measuring hand hygiene compliance in a hospital unit.

Singh, A. et al. Automatic detection of hand hygiene using computer vision technology. J. Am. Med. Inform. Assoc. https://doi.org/10.1093/jamia/ocaa115 (2020).

Chen, J., Cremer, J. F., Zarei, K., Segre, A. M. & Polgreen, P. M. Using computer vision and depth sensing to measure healthcare worker-patient contacts and personal protective equipment adherence within hospital rooms. Open Forum Infect. Dis. 3, ofv200 (2016).

Awwad, S., Tarvade, S., Piccardi, M. & Gattas, D. J. The use of privacy-protected computer vision to measure the quality of healthcare worker hand hygiene. Int. J. Qual. Health Care 31, 36–42 (2019).

Weiser, T. G. et al. An estimation of the global volume of surgery: a modelling strategy based on available data. Lancet 372, 139–144 (2008).

Anderson, O., Davis, R., Hanna, G. B. & Vincent, C. A. Surgical adverse events: a systematic review. Am. J. Surg. 206, 253–262 (2013).

Bonrath, E. M., Dedy, N. J., Gordon, L. E. & Grantcharov, T. P. Comprehensive surgical coaching enhances surgical skill in the operating room: a randomized controlled trial. Ann. Surg. 262, 205–212 (2015).

Vaidya, A. et al. Current status of technical skills assessment tools in surgery: a systematic review. J. Surg. Res. 246, 342–378 (2020).

Ghasemloonia, A. et al. Surgical skill assessment using motion quality and smoothness. J. Surg. Educ. 74, 295–305 (2017).

Khalid, S., Goldenberg, M., Grantcharov, T., Taati, B. & Rudzicz, F. Evaluation of deep learning models for identifying surgical actions and measuring performance. JAMA Netw. Open 3, e201664 (2020).

Law, H., Ghani, K. & Deng, J. Surgeon technical skill assessment using computer vision based analysis. In Proc. 2nd Machine Learning for Healthcare Conference 88–99 (PMLR, 2017).

Jin, A. et al. Tool detection and operative skill assessment in surgical videos using region-based convolutional neural networks. In Proc. Winter Conference on Applications of Computer Vision 691–699 (IEEE, 2018).

Twinanda, A. P. et al. EndoNet: a deep architecture for recognition tasks on laparoscopic videos. IEEE Trans. Med. Imaging 36, 86–97 (2017).

Hashimoto, D. A., Rosman, G., Rus, D. & Meireles, O. R. Artificial intelligence in surgery: promises and perils. Ann. Surg. 268, 70–76 (2018).

Greenberg, C. C., Regenbogen, S. E., Lipsitz, S. R., Diaz-Flores, R. & Gawande, A. A. The frequency and significance of discrepancies in the surgical count. Ann. Surg. 248, 337–341 (2008).

Agrawal, A. Counting matters: lessons from the root cause analysis of a retained surgical item. Jt. Comm. J. Qual. Patient Saf. 38, 566–574 (2012).

Hempel, S. et al. Wrong-site surgery, retained surgical items, and surgical fires: a systematic review of surgical never events. JAMA Surg. 150, 796–805 (2015).

Cima, R. R. et al. Using a data-matrix-coded sponge counting system across a surgical practice: impact after 18 months. Jt. Comm. J. Qual. Patient Saf. 37, 51–58 (2011).

Rupp, C. C. et al. Effectiveness of a radiofrequency detection system as an adjunct to manual counting protocols for tracking surgical sponges: a prospective trial of 2,285 patients. J. Am. Coll. Surg. 215, 524–533 (2012).

Kassahun, Y. et al. Surgical robotics beyond enhanced dexterity instrumentation: a survey of machine learning techniques and their role in intelligent and autonomous surgical actions. Int. J. Comput. Assist. Radiol. Surg. 11, 553–568 (2016).

Kadkhodamohammadi, A., Gangi, A., de Mathelin, M. & Padoy, N. A multi-view RGB-D approach for human pose estimation in operating rooms. In Proc. Winter Conference on Applications of Computer Vision 363–372 (IEEE, 2017).

Jung, J. J., Jüni, P., Lebovic, G. & Grantcharov, T. First-year analysis of the operating room black box study. Ann. Surg. 271, 122–127 (2020).

Joukes, E., Abu-Hanna, A., Cornet, R. & de Keizer, N. F. Time spent on dedicated patient care and documentation tasks before and after the introduction of a structured and standardized electronic health record. Appl. Clin. Inform. 9, 46–53 (2018).

Heaton, H. A., Castaneda-Guarderas, A., Trotter, E. R., Erwin, P. J. & Bellolio, M. F. Effect of scribes on patient throughput, revenue, and patient and provider satisfaction: a systematic review and meta-analysis. Am. J. Emerg. Med. 34, 2018–2028 (2016).

Rich, N. The impact of working as a medical scribe. Am. J. Emerg. Med. 35, 513 (2017).

Boulton, C. How Google Glass automates patient documentation for Dignity Health. Wall Street Journal (16 June 2014).

Blackley, S. V., Huynh, J., Wang, L., Korach, Z. & Zhou, L. Speech recognition for clinical documentation from 1990 to 2018: a systematic review. J. Am. Med. Inform. Assoc. 26, 324–338 (2019).

Chiu, C.-C. et al. Speech recognition for medical conversations. In Proc. 18th Annual Conference of the International Speech Communication Association 2972–2976 (ISCA, 2018). This paper developed a speech-recognition algorithm to transcribe anonymized conversations between patients and clinicians.

Pranaat, R. et al. Use of simulation based on an electronic health records environment to evaluate the structure and accuracy of notes generated by medical scribes: proof-of-concept study. JMIR Med. Inform. 5, e30 (2017).

Kaplan, R. S. et al. Using time-driven activity-based costing to identify value improvement opportunities in healthcare. J. Healthc. Manag. 59, 399–412 (2014).

Porter, M. E. Value-based health care delivery. Ann. Surg. 248, 503–509 (2008).

Keel, G., Savage, C., Rafiq, M. & Mazzocato, P. Time-driven activity-based costing in health care: a systematic review of the literature. Health Policy 121, 755–763 (2017).

French, K. E. et al. Measuring the value of process improvement initiatives in a preoperative assessment center using time-driven activity-based costing. Healthcare 1, 136–142 (2013).

Sánchez, D., Tentori, M. & Favela, J. Activity recognition for the smart hospital. IEEE Intelligent Systems 23, 50–57 (2008).

United Nations. World Population Ageing 2019 https://www.un.org/development/desa/pd/sites/www.un.org.development.desa.pd/files/files/documents/2020/Jan/un_2019_worldpopulationageing_report.pdf (2020).

Mamikonian-Zarpas, A. & Laganá, L. The relationship between older adults’ risk for a future fall and difficulty performing activities of daily living. J. Aging Gerontol. 3, 8–16 (2015).

Stineman, M. G. et al. All-cause 1-, 5-, and 10-year mortality in elderly people according to activities of daily living stage. J. Am. Geriatr. Soc. 60, 485–492 (2012).

Phelan, E. A., Williams, B., Penninx, B. W. J. H., LoGerfo, J. P. & Leveille, S. G. Activities of daily living function and disability in older adults in a randomized trial of the health enhancement program. J. Gerontol. A 59, M838–M843 (2004).

Carlsson, G., Haak, M., Nygren, C. & Iwarsson, S. Self-reported versus professionally assessed functional limitations in community-dwelling very old individuals. Int. J. Rehabil. Res. 35, 299–304 (2012).

Wang, Z., Yang, Z. & Dong, T. A review of wearable technologies for elderly care that can accurately track indoor position, recognize physical activities and monitor vital signs in real time. Sensors 17, 341 (2017).

Katz, S. Assessing self-maintenance: activities of daily living, mobility, and instrumental activities of daily living. J. Am. Geriatr. Soc. 31, 721–727 (1983).

Uddin, M. Z., Khaksar, W. & Torresen, J. Ambient sensors for elderly care and independent living: a survey. Sensors 18, 2027 (2018).

Luo, Z. et al. Computer vision-based descriptive analytics of seniors’ daily activities for long-term health monitoring. In Proc. 3rd Machine Learning for Healthcare Conference 1–18 (PMLR, 2018). This study created spatial and temporal summaries of activities of daily living using a depth and thermal sensor inside the bedroom of an older resident.

Cheng, H., Liu, Z., Zhao, Y., Ye, G. & Sun, X. Real world activity summary for senior home monitoring. Multimedia Tools Appl. 70, 177–197 (2014).

Lee, M.-T., Jang, Y. & Chang, W.-Y. How do impairments in cognitive functions affect activities of daily living functions in older adults? PLoS ONE 14, e0218112 (2019).

Chen, J., Zhang, J., Kam, A. H. & Shue, L. An automatic acoustic bathroom monitoring system. In Proc. International Symposium on Circuits and Systems 1750–1753 (IEEE, 2005).

Shrestha, A. et al. Elderly care: activities of daily living classification with an S band radar. J. Eng. 2019, 7601–7606 (2019).

Ganz, D. A. & Latham, N. K. Prevention of falls in community-dwelling older adults. N. Engl. J. Med. 382, 734–743 (2020).

Bergen, G., Stevens, M. R. & Burns, E. R. Falls and fall injuries among adults aged ≥65 years — United States, 2014. MMWR Morb. Mortal. Wkly. Rep. 65, 993–998 (2016).

Wild, D., Nayak, U. S. & Isaacs, B. How dangerous are falls in old people at home? Br. Med. J. (Clin. Res. Ed.) 282, 266–268 (1981).

Scheffer, A. C., Schuurmans, M. J., van Dijk, N., van der Hooft, T. & de Rooij, S. E. Fear of falling: measurement strategy, prevalence, risk factors and consequences among older persons. Age Ageing 37, 19–24 (2008).

Pol, M. et al. Older people’s perspectives regarding the use of sensor monitoring in their home. Gerontologist 56, 485–493 (2016).

Erol, B., Amin, M. G. & Boashash, B. Range-Doppler radar sensor fusion for fall detection. In Proc. IEEE Radar Conference 819–824 (IEEE, 2017).

Chaudhuri, S., Thompson, H. & Demiris, G. Fall detection devices and their use with older adults: a systematic review. J. Geriatr. Phys. Ther. 37, 178–196 (2014).

Tegou, T. et al. A low-cost indoor activity monitoring system for detecting frailty in older adults. Sensors 19, 452 (2019).

Rantz, M. et al. Automated in-home fall risk assessment and detection sensor system for elders. Gerontologist 55, S78–S87 (2015).

Su, B. Y., Ho, K. C., Rantz, M. J. & Skubic, M. Doppler radar fall activity detection using the wavelet transform. IEEE Trans. Biomed. Eng. 62, 865–875 (2015).

Stone, E. E. & Skubic, M. Fall detection in homes of older adults using the Microsoft Kinect. IEEE J. Biomed. Health Inform. 19, 290–301 (2015).

Rantz, M. et al. Randomized trial of intelligent sensor system for early illness alerts in senior housing. J. Am. Med. Dir. Assoc. 18, 860–870 (2017). This randomized trial investigated the clinical efficacy of a real-time intervention system—triggered by abnormal gait patterns, as detected by ambient sensors—on the walking ability of older individuals at home.

Kwolek, B. & Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Programs Biomed. 117, 489–501 (2014).

Wren, T. A. L., Gorton, G. E. III, Ounpuu, S. & Tucker, C. A. Efficacy of clinical gait analysis: a systematic review. Gait Posture 34, 149–153 (2011).

Wren, T. A. et al. Outcomes of lower extremity orthopedic surgery in ambulatory children with cerebral palsy with and without gait analysis: results of a randomized controlled trial. Gait Posture 38, 236–241 (2013).

Del Din, S. et al. Gait analysis with wearables predicts conversion to Parkinson disease. Ann. Neurol. 86, 357–367 (2019).

Kidziński, Ł., Delp, S. & Schwartz, M. Automatic real-time gait event detection in children using deep neural networks. PLoS ONE 14, e0211466 (2019).

Díaz, S., Stephenson, J. B. & Labrador, M. A. Use of wearable sensor technology in gait, balance, and range of motion analysis. Appl. Sci. 10, 234 (2020).

Juen, J., Cheng, Q., Prieto-Centurion, V., Krishnan, J. A. & Schatz, B. Health monitors for chronic disease by gait analysis with mobile phones. Telemed. J. E Health 20, 1035–1041 (2014).

Kononova, A. et al. The use of wearable activity trackers among older adults: focus group study of tracker perceptions, motivators, and barriers in the maintenance stage of behavior change. JMIR Mhealth Uhealth 7, e9832 (2019).

Da Gama, A., Fallavollita, P., Teichrieb, V. & Navab, N. Motor rehabilitation using Kinect: a systematic review. Games Health J. 4, 123–135 (2015).

Cho, C.-W., Chao, W.-H., Lin, S.-H. & Chen, Y.-Y. A vision-based analysis system for gait recognition in patients with Parkinson’s disease. Expert Syst. Appl. 36, 7033–7039 (2009).

Seifert, A., Zoubir, A. M. & Amin, M. G. Detection of gait asymmetry using indoor Doppler radar. In Proc. IEEE Radar Conference 1–6 (IEEE, 2019).

Altaf, M. U. B., Butko, T. & Juang, B.-H. Acoustic gaits: gait analysis with footstep sounds. IEEE Trans. Biomed. Eng. 62, 2001–2011 (2015).

Galna, B. et al. Accuracy of the Microsoft Kinect sensor for measuring movement in people with Parkinson’s disease. Gait Posture 39, 1062–1068 (2014).

Jaume-i-Capó, A., Martínez-Bueso, P., Moyà-Alcover, B. & Varona, J. Interactive rehabilitation system for improvement of balance therapies in people with cerebral palsy. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 419–427 (2014).

Tinetti, M. E., Williams, T. F. & Mayewski, R. Fall risk index for elderly patients based on number of chronic disabilities. Am. J. Med. 80, 429–434 (1986).

Wang, C. et al. Multimodal gait analysis based on wearable inertial and microphone sensors. In Proc. IEEE SmartWorld 1–8 (2017).

Mental Health America. Mental Health in America - Adult Data 2018 https://www.mhanational.org/issues/mental-health-america-adult-data-2018 (2018).

Wittchen, H. U. et al. The size and burden of mental disorders and other disorders of the brain in Europe 2010. Eur. Neuropsychopharmacol. 21, 655–679 (2011).

Snowden, L. R. Bias in mental health assessment and intervention: theory and evidence. Am. J. Public Health 93, 239–243 (2003).

Shatte, A. B. R., Hutchinson, D. M. & Teague, S. J. Machine learning in mental health: a scoping review of methods and applications. Psychol. Med. 49, 1426–1448 (2019).

Chakraborty, D. et al. Assessment and prediction of negative symptoms of schizophrenia from RGB+D movement signals. In Proc. 19th International Workshop on Multimedia Signal Processing 1–6 (2017).

Pestian, J. P. et al. A controlled trial using natural language processing to examine the language of suicidal adolescents in the emergency department. Suicide Life Threat. Behav. 46, 154–159 (2016).

Lutz, W., Leon, S. C., Martinovich, Z., Lyons, J. S. & Stiles, W. B. Therapist effects in outpatient psychotherapy: a three-level growth curve approach. J. Couns. Psychol. 54, 32–39 (2007).

Miner, A. S. et al. Assessing the accuracy of automatic speech recognition for psychotherapy. NPJ Digit. Med. 3, 82 (2020).

Xiao, B., Imel, Z. E., Georgiou, P. G., Atkins, D. C. & Narayanan, S. S. “Rate my therapist”: automated detection of empathy in drug and alcohol counseling via speech and language processing. PLoS ONE 10, e0143055 (2015).

Ewbank, M. P. et al. Quantifying the association between psychotherapy content and clinical outcomes using deep learning. JAMA Psychiatry 77, 35–43 (2020).

Sadeghian, A., Alahi, A. & Savarese, S. Tracking the untrackable: learning to track multiple cues with long-term dependencies. In Proc. Conference on Computer Vision and Pattern Recognition 300–311 (IEEE, 2017).

Liu, G. et al. Image inpainting for irregular holes using partial convolutions. In Proc. 15th European Conference on Computer Vision 89–105 (Springer, 2018).

Marafioti, A., Perraudin, N., Holighaus, N. & Majdak, P. A context encoder for audio inpainting. IEEE/ACM Trans. Audio Speech Lang. Process. 27, 2362–2372 (2019).

Chen, Y., Tian, Y. & He, M. Monocular human pose estimation: a survey of deep learning-based methods. Comput. Vis. Image Underst. 192, 102897 (2020).

Krishna, R. et al. Visual genome: connecting language and vision using crowdsourced dense image annotations. Int. J. Comput. Vis. 123, 32–73 (2017).

Johnson, J. et al. Image retrieval using scene graphs. In Proc. Conference on Computer Vision and Pattern Recognition 3668–3678 (IEEE, 2015).

Shi, J., Zhang, H. & Li, J. Explainable and explicit visual reasoning over scene graphs. In Proc. Conference on Computer Vision and Pattern Recognition 8368–8376 (IEEE/CVF, 2019).

Halamka, J. D. Early experiences with big data at an academic medical center. Health Aff. 33, 1132–1138 (2014).

Verbraeken, J. et al. A survey on distributed machine learning. ACM Comput. Surv. 53, 30 (2020).

You, Y. et al. Large batch optimization for deep learning: training BERT in 76 minutes. In Proc. 8th International Conference on Learning Representations 1–38 (2020).

Kitaev, N., Kaiser, Ł. & Levskaya, A. Reformer: the efficient transformer. In Proc. 8th International Conference on Learning Representations 1–12 (2020).

Heilbron, F., Niebles, J. & Ghanem, B. Fast temporal activity proposals for efficient detection of human actions in untrimmed videos. In Proc. Conference on Computer Vision and Pattern Recognition 1914–1923 (IEEE, 2016).

Zhu, Y., Lan, Z., Newsam, S. & Hauptmann, A. Hidden two-stream convolutional networks for action recognition. In Proc. 14th Asian Conference on Computer Vision 363–378 (Springer, 2019).

Han, S., Mao, H. & Dally, W. J. Deep compression: compressing deep neural networks with pruning, trained quantization and Huffman coding. In Proc. 4th International Conference on Learning Representations 1–14 (2016). This paper introduced a method to compress neural network models and reduce their computational and storage requirements.

Micikevicius, P. et al. Mixed precision training. In Proc. 6th International Conference on Learning Representations 1–12 (2018).

Yu, G. & Yuan, J. Fast action proposals for human action detection and search. In Proc. Conference on Computer Vision and Pattern Recognition 1302–1311 (IEEE, 2015).

Zou, J. & Schiebinger, L. AI can be sexist and racist — it’s time to make it fair. Nature 559, 324–326 (2018).

Pleiss, G., Raghavan, M., Wu, F., Kleinberg, J. & Weinberger, K. Q. On fairness and calibration. Adv. Neural Inf. Process. Syst. 30, 5680–5689 (2017).

Neyshabur, B., Bhojanapalli, S., McAllester, D. & Srebro, N. Exploring generalization in deep learning. Adv. Neural Inf. Process. Syst. 30, 5947–5956 (2017).

Howard, J. & Ruder, S. Universal language model fine-tuning for text classification. In Proc. 56th Annual Meeting of the Association for Computational Linguistics 328–339 (2018).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359 (2010).

Patel, V. M., Gopalan, R., Li, R. & Chellappa, R. Visual domain adaptation: a survey of recent advances. IEEE Signal Process. Mag. 32, 53–69 (2015).

Wang, Y., Kwok, J., Ni, L. M. & Yao, Q. Generalizing from a few examples: a survey on few-shot learning. ACM Comput. Surv. 53, 63 (2020).

Jobin, A., Ienca, M. & Vayena, E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1, 389–399 (2019).

Li, C., Lubecke, V. M., Boric-Lubecke, O. & Lin, J. A review on recent advances in Doppler radar sensors for noncontact healthcare monitoring. IEEE Trans. Microw. Theory Tech. 61, 2046–2060 (2013).

Rockhold, F., Nisen, P. & Freeman, A. Data sharing at a crossroads. N. Engl. J. Med. 375, 1115–1117 (2016).

Wiens, J. et al. Do no harm: a roadmap for responsible machine learning for health care. Nat. Med. 25, 1337–1340 (2019).

El Emam, K., Jonker, E., Arbuckle, L. & Malin, B. A systematic review of re-identification attacks on health data. PLoS ONE 6, e28071 (2011).

Nasrollahi, K. & Moeslund, T. Super-resolution: a comprehensive survey. Mach. Vis. Appl. 25, 1423–1468 (2014).

Brewster, T. How an amateur rap crew stole surveillance tech that tracks almost every American. Forbes Magazine (12 October 2018).

Cutler, J. E. How can patients make money off their medical data? Bloomberg Law (29 January 2019).

Cahan, E. M., Hernandez-Boussard, T., Thadaney-Israni, S. & Rubin, D. L. Putting the data before the algorithm in big data addressing personalized healthcare. NPJ Digit. Med. 2, 78 (2019).

Rajkomar, A., Hardt, M., Howell, M. D., Corrado, G. & Chin, M. H. Ensuring fairness in machine learning to advance health equity. Ann. Intern. Med. 169, 866–872 (2018).

Char, D. S., Shah, N. H. & Magnus, D. Implementing machine learning in health care — addressing ethical challenges. N. Engl. J. Med. 378, 981–983 (2018).

Buolamwini, J. & Gebru, T. Gender shades: intersectional accuracy disparities in commercial gender classification. In Proc. 1st Conference on Fairness, Accountability and Transparency 77–91 (2018).

Chen, I. Y., Szolovits, P. & Ghassemi, M. Can AI help reduce disparities in general medical and mental health care? AMA J. Ethics 21, E167–E179 (2019).

Wolff, R. F. et al. PROBAST: a tool to assess the risk of bias and applicability of prediction model studies. Ann. Intern. Med. 170, 51–58 (2019).

Murdoch, W. J., Singh, C., Kumbier, K., Abbasi-Asl, R. & Yu, B. Definitions, methods, and applications in interpretable machine learning. Proc. Natl Acad. Sci. USA 116, 22071–22080 (2019). This article proposed a framework for evaluating model interpretability through predictive accuracy, descriptive accuracy and relevancy.

He, J. et al. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 25, 30–36 (2019).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. M. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. Ann. Intern. Med. 162, 55–63 (2015).

Mitchell, M. et al. Model cards for model reporting. In Proc. 2nd Conference on Fairness, Accountability, and Transparency 220–229 (2019).

Thomas, R. et al. Deliberative democracy and cancer screening consent: a randomised control trial of the effect of a community jury on men’s knowledge about and intentions to participate in PSA screening. BMJ Open 4, e005691 (2014).

Otto, J. L., Holodniy, M. & DeFraites, R. F. Public health practice is not research. Am. J. Public Health 104, 596–602 (2014).

Gerke, S., Yeung, S. & Cohen, I. G. Ethical and legal aspects of ambient intelligence in hospitals. J. Am. Med. Assoc. 323, 601–602 (2020).

Kim, J. W., Jang, B. & Yoo, H. Privacy-preserving aggregation of personal health data streams. PLoS ONE 13, e0207639 (2018).

van der Maaten, L., Postma, E. & van den Herik, J. Dimensionality reduction: a comparative review. J. Mach. Learn. Res. 10, 13 (2009).

Kocabas, M., Athanasiou, N. & Black, M. J. VIBE: video inference for human body pose and shape estimation. In Proc. Conference on Computer Vision and Pattern Recognition 5253–5263 (IEEE/CVF, 2020).

McMahan, H. B., Moore, E., Ramage, D., Hampson, S. & Arcas, B. A. Communication-efficient learning of deep networks from decentralized data. In Proc. 20th International Conference on Artificial Intelligence and Statistics 1273–1282 (PMLR, 2017). This paper proposed federated learning, a method for training a shared model while the data is distributed across multiple client devices.

Gentry, C. Fully homomorphic encryption using ideal lattices. In Proc. 41st Symposium on Theory of Computing 169–178 (ACM, 2009). This paper proposed the first fully homomorphic encryption scheme that supports addition and multiplication on encrypted data.

McCoy, S. T. Aboard USNS Comfort (US Navy, 2003).

Acknowledgements

We thank A. Kaushal, D. C. Magnus, G. Burke, K. Schulman and M. Hutson for providing comments on this paper. We also thank our clinical collaborators over the years, including A. S. Miner, A. Singh, B. Campbell, D. F. Amanatullah, F. R. Salipur, H. Rubin, J. Jopling, K. Deru, N. L. Downing, R. Nazerali, T. Platchek and W. Beninati, and our technical collaborators over the years, including A. Alahi, A. Rege, B. Liu, B. Peng, D. Zhao, E. Chou, E. Adeli, G. M. Bianconi, G. Pusiol, H. Cai, J. Beal, J.-T. Hsieh, M. Guo, R. Mehra, S. Mehra, S. Yeung and Z. Luo. A.H.’s graduate work was partially supported by the US Office of Naval Research (grant N00014-16-1-2127) and the Stanford Institute for Human-Centered Artificial Intelligence.

Author information

Contributions

A.H., A.M. and L.F.-F. conceptualized the paper and its structure. A.H. and L.F.-F. wrote the paper. A.H. created the figures. A.M. provided substantial additions and edits. All authors contributed to multiple parts of the paper, as well as to its final style and overall content.

Ethics declarations

Competing interests

A.M. has financial interests in Prealize Health. L.F.-F. and A.M. have financial interests in Dawnlight Technologies. A.H. declares no competing interests.

Additional information

Peer review information Nature thanks Andrew Beam, Eric Topol and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Supplementary information

Supplementary Information

This file contains Supplementary Note 1.

About this article

Cite this article

Haque, A., Milstein, A. & Fei-Fei, L. Illuminating the dark spaces of healthcare with ambient intelligence. Nature 585, 193–202 (2020). https://doi.org/10.1038/s41586-020-2669-y
